We study several relaxations of non-convex functionals that arise in image processing. Some problems, such as image segmentation, can be formulated as the minimization of a functional whose minimizer represents the segmentation.

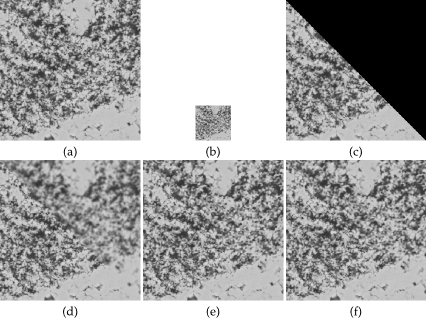

Several methods have been proposed to find local or global minima of the non-convex functional of the two-phase piecewise constant Mumford-Shah model. A convex relaxation of this model makes it possible to find a global minimum of the non-convex functional. We present and compare some of these methods and propose a new narrow-band model, which finds local minima while using robust convex optimization algorithms. We then build a convex relaxation of a two-phase segmentation model that compares two given histograms with those of the two segmented regions.
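For orientation, the two-phase piecewise constant Mumford-Shah functional and a convex relaxation of it are commonly written as below. The notation is an assumption made for this sketch and may differ from the formulation used in this work: $f$ is the image on the domain $\Omega$, $\Sigma$ the segmented region, $c_1, c_2$ the constant values in the two regions, $\lambda$ the fidelity weight, and $u$ the relaxed indicator function. The relaxation shown is the standard one for fixed $c_1, c_2$.

```latex
\begin{align*}
E(\Sigma, c_1, c_2) &= \operatorname{Per}(\Sigma; \Omega)
  + \lambda \int_{\Sigma} (f - c_1)^2 \, dx
  + \lambda \int_{\Omega \setminus \Sigma} (f - c_2)^2 \, dx, \\
\min_{0 \le u \le 1} \; & \int_{\Omega} |\nabla u| \, dx
  + \lambda \int_{\Omega} \big( (f - c_1)^2 - (f - c_2)^2 \big) \, u \, dx .
\end{align*}
```

The first functional is non-convex because the admissible regions $\Sigma$, or equivalently their binary indicator functions, do not form a convex set. The second problem replaces the binary indicator by a function $u$ with values in $[0, 1]$; for fixed $c_1, c_2$, thresholding a minimizer $u$ at almost any level in $[0, 1]$ yields a global minimizer of the first.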

We also study relaxations of high-dimensional multi-label problems such as optical flow computation. We propose a convex relaxation with a new algorithm, which is iterative and uses exact projections. We also give a new algorithm for a relaxation that is convex in each variable separately but not jointly convex. We study how to construct a solution of the original non-convex problem from a solution of the relaxed problem, and we compare existing methods with new ones.